Why Is Content Moderation Necessary?

June 9, 2023

Content moderation is necessary for several reasons:

Protecting user safety:

Content moderation helps identify and filter out harmful content such as violence, pornography, terrorism, hate speech, and cyberbullying, shielding users from its negative influence and keeping cyberspace safe and healthy.

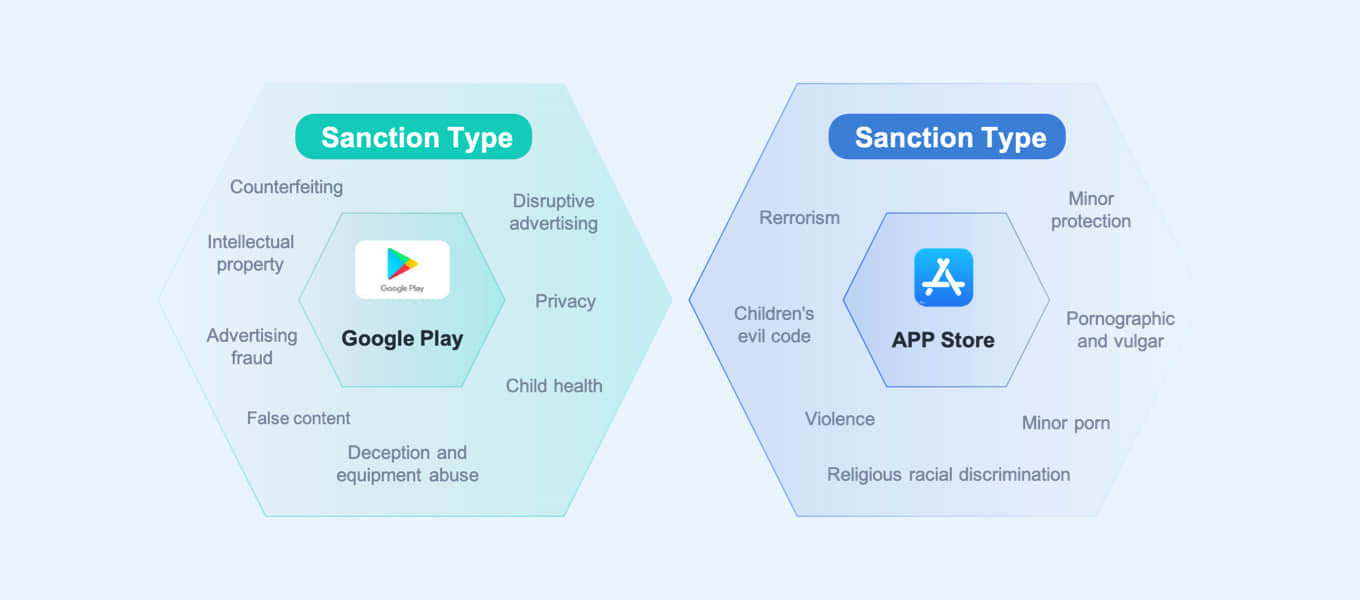

Complying with laws and regulations:

Content moderation helps online platforms and their users comply with national laws and regulations, prevents illegal activities such as intellectual property infringement, malware distribution, and phishing, and maintains order in cyberspace.

Preserving brand image and reputation:

For businesses and platforms, content moderation can prevent inappropriate content from damaging their brand image and reputation, ensuring user and customer trust in the platform and its services.

Promoting community building:

Content moderation helps create a positive, healthy community environment, encourages users to engage in constructive exchanges and interactions, and improves user satisfaction and loyalty.

Guarding against false information:

Content moderation can identify and manage false information, misleading content, and rumors, limiting their spread online and helping to prevent public confusion and panic.

Protecting user privacy:

Content moderation helps prevent the leakage of users' private information, such as personal contact details and addresses, ensuring that users' privacy is adequately protected.

In conclusion, content moderation plays an important role in protecting users, maintaining order in cyberspace, promoting community building, and enhancing brand reputation. Through effective content moderation, platforms and service providers can create a safer, healthier, and more orderly online environment for users.