Challenges & Solutions of NSFW Moderation

July 4, 2023

NSFW content moderation has become increasingly important in recent years as social media and online communities have become ubiquitous. With the rise of user-generated content, platforms have had to develop new moderation techniques and technologies to manage and prevent the spread of NSFW content. This has led to a trend towards automating moderation using advanced machine learning algorithms and image recognition technology. However, the effectiveness of such techniques is limited, and human moderation remains the most reliable method of preventing NSFW content from causing harm to users and brands alike. The future of NSFW content moderation lies in a combination of both technology and human moderation, as platforms continue to develop new methods of keeping their users' interests and safety at the forefront of their policies.

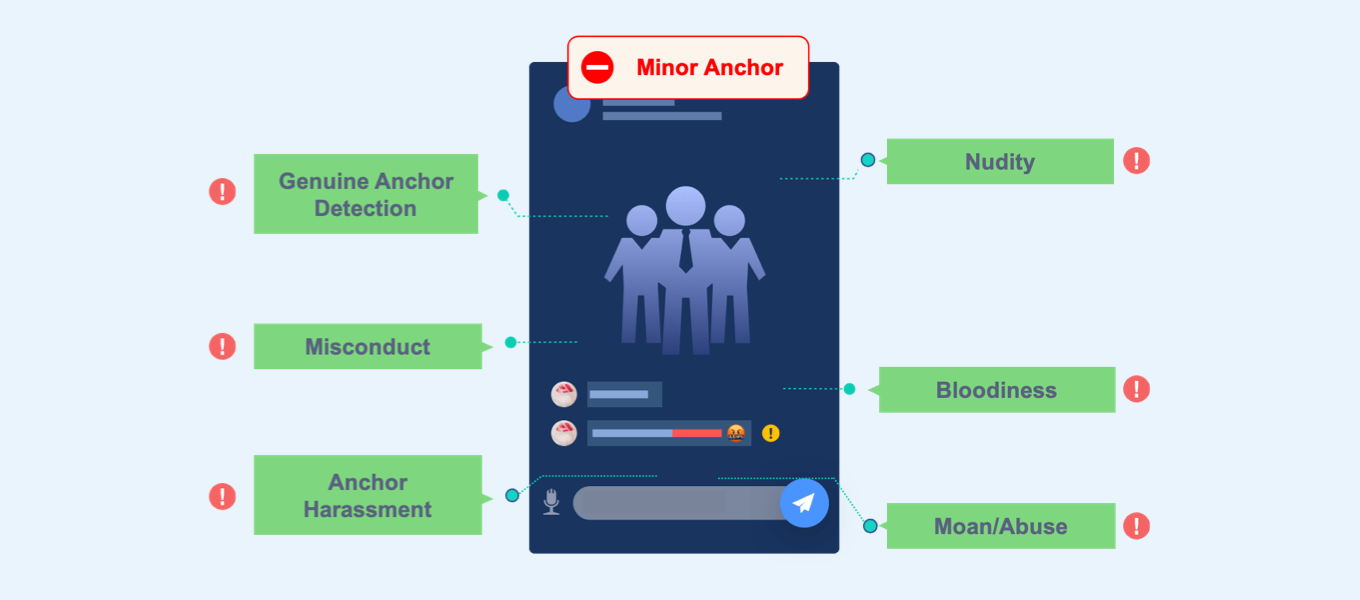

NSFW content comes in several forms, such as explicit sexual content, violent imagery, and hate speech. Each type carries its own dangers, including harm to individual users and damage to brand reputation. Social media platforms that allow explicit sexual content risk a poor brand image, as users may find such content offensive. NSFW content may also promote violence, harassment, and cyberbullying, posing significant risks to users.

Moderating NSFW content is critical to creating a healthy and secure online environment. Online platforms use various moderation techniques to prevent the proliferation of such content, ranging from keyword filtering and blacklists to image recognition and human moderation. Keyword filtering and blacklists flag content that matches specific terms or parameters, while image recognition technology identifies NSFW images as soon as they are uploaded or shared. Human moderation is the most effective way to moderate content comprehensively, but it can also be the costliest option.
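As a rough illustration of how these techniques can be layered, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `BLOCKLIST` terms are placeholders, the two thresholds are arbitrary, and `image_score` stands in for the output of whatever image recognition model a platform uses (it is not any specific vendor's API). Clear-cut cases are handled automatically, and anything ambiguous is routed to a human reviewer.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Placeholder terms for illustration; a real platform would maintain its own list.
BLOCKLIST = {"badword1", "badword2"}

# Arbitrary example thresholds for a hypothetical image classifier score in [0, 1].
BLOCK_THRESHOLD = 0.9
REVIEW_THRESHOLD = 0.5


@dataclass
class Decision:
    action: str  # "allow", "review", or "block"
    reason: str


def matches_blocklist(text: str) -> bool:
    """Return True if any whole word in the text is on the blocklist."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return any(token in BLOCKLIST for token in tokens)


def moderate(text: str, image_score: Optional[float] = None) -> Decision:
    """Combine keyword filtering, an image score, and human review."""
    # A very confident image score is blocked without human involvement.
    if image_score is not None and image_score >= BLOCK_THRESHOLD:
        return Decision("block", "image score above block threshold")
    # Keyword matches ignore context, so they go to a human reviewer.
    if matches_blocklist(text):
        return Decision("review", "blocklisted keyword")
    # Uncertain image scores are also escalated to a human reviewer.
    if image_score is not None and image_score >= REVIEW_THRESHOLD:
        return Decision("review", "uncertain image score")
    return Decision("allow", "no signal")
```

Sending only the uncertain cases to reviewers is one way to keep the cost of human moderation down while still relying on it where automated checks are weakest.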

In conclusion, NSFW content moderation is vital for creating a safe and inclusive online environment. Online platforms must employ a range of moderation techniques to prevent such content from spreading to their communities. As moderation technology advances, platforms need to adopt new methods and techniques to detect and limit the spread of NSFW content. Ultimately, moderation is key to ensuring that online platforms can achieve their goals while protecting their users.

NEXTDATA is a leading global risk control service provider that offers risk content detection services, including NSFW audit services. It uses APIs to help customers identify and intercept risky content, covering multiple fields such as social media, live streaming, gaming, and online communities.
